Earlier this month, Tolia responded to the Express article with a blog post on Nextdoor.com outlining the company’s plans to curb racial profiling, including potential new training initiatives and changes to the site’s guidelines. Today at the co-working space Impact Hub Oakland, Tolia and three other Nextdoor representatives met with five members of Neighbors for Racial Justice, which invited me to sit in on the meeting. The activists, including Upper Dimond resident Shikira Porter, whom I featured prominently in my piece, presented Nextdoor with a number of proposals aimed at eliminating racial profiling on the site. In addition to Tolia, the meeting included Kelsey Grady, Nextdoor’s head of communications; Gordon Strause, director of neighborhood operations; and Maryam Mohit, director of product.

Tolia told the group that Nextdoor plans to add a “racial profiling button” to the site, which means users will be able to flag posts for racial profiling. Currently, users can flag comments that they believe are inappropriate or abusive, but there’s no way to specify racial profiling. “We want to create that very specific signal for us,” Tolia said. In the current system, when users flag a post for any reason, neighborhood “leads,” who are volunteer citizen moderators, can review the post and decide whether it violates Nextdoor’s guidelines, which prohibit discriminatory posts and profiling. The problem, according to Neighbors for Racial Justice, is that the leads sometimes do not take those concerns seriously and, on the contrary, have even gone so far as to censor posts calling out racial profiling in some Oakland neighborhoods. Users can reach out to the company directly if they are not satisfied with the lead’s response, but some have said that this process is difficult.

In addition to the new racial profiling button, Grady said after the meeting that the company is committed to revising the guidelines to better address concerns about racial profiling, but has not yet decided on the specifics of those rewrites. The guidelines currently say: “Racial profiling is the act of making assumptions about a person’s character or intentions based on their appearance or identity rather than their actions.”

Today, Neighbors for Racial Justice proposed a number of more substantive initiatives — changes that Tolia and the others agreed to consider. The group suggested that Nextdoor adopt clear restrictions on how people post about crime, including prohibiting the use of racially offensive “code words,” such as the “AA” label — which, I have seen, people frequently use to describe “suspicious” African-American people on Nextdoor’s Oakland pages. More broadly, the group requested that Nextdoor consider banning posts that describe “suspicions,” since those are subject to people’s implicit racial biases, and instead direct users to only post crime and safety warnings when they witness actual illegal behavior.

Within those crime posts, Nextdoor should ban descriptions of suspects that are vague, such as “young Black man,” the group said. As one of the activists, Bedford Palmer, wrote in a handout he gave the Nextdoor reps today: “These descriptions are directly responsible for the harassment of neighbors that ‘fit the description,’ and places them in danger of negative police interactions.”

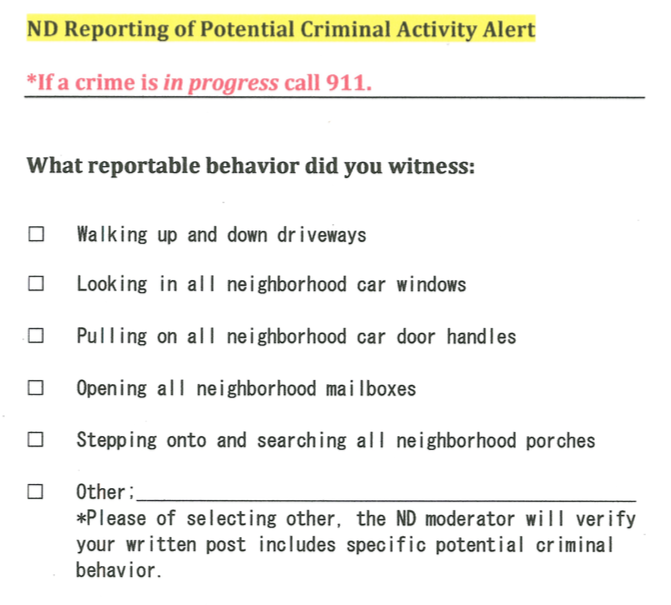

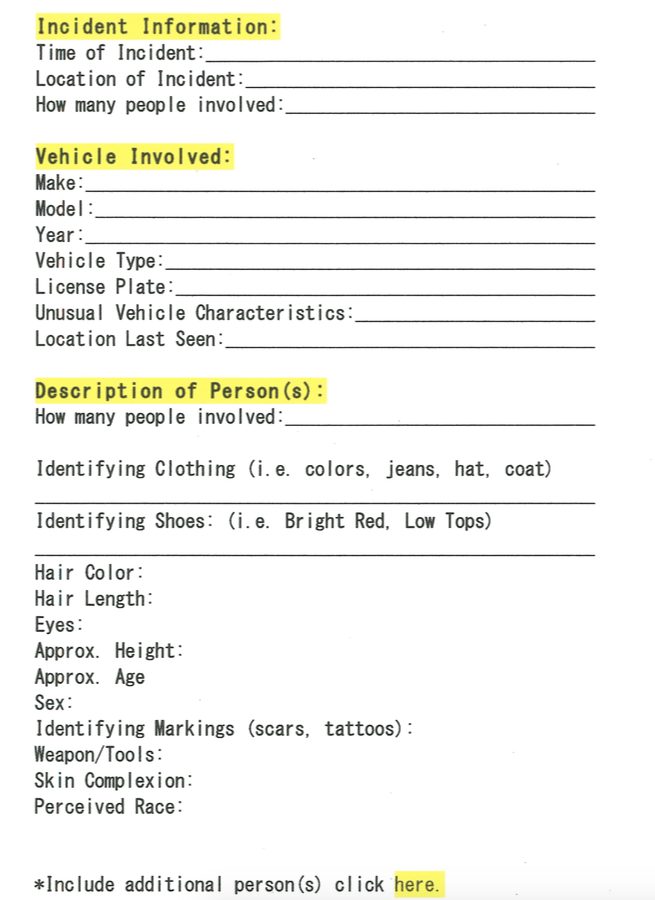

And if users do want to post about a crime, they should have to fill out a specific form, according to Neighbors for Racial Justice. Porter brought this document to the meeting as an example:

In other words, members would have to specify the criminal behavior they witnessed and describe a wide range of physical characteristics — which can include “perceived race.” If users can’t specify those other descriptors — meaning if they are only going to post “tall, Black man” — then they should not be allowed to post at all, Porter explained. The group also suggested that Nextdoor more actively track and monitor crime posts — and zero in on specific users or messages that attract multiple flags for racial profiling.

Neighbors for Racial Justice also proposed “multicultural competence” training for neighborhood leads, so that the moderators would be better equipped to understand and recognize posts that are racist, offensive, or oppressive in some way. The group further suggested that Nextdoor contract with social justice experts to help address the problem.

The Nextdoor representatives seemed receptive to many of the ideas, and Tolia asked the group to follow up with suggestions of specific local experts on racial profiling and training that the company could consider for possible partnerships. Tolia told me after the meeting that he would seriously consider adding some kind of form like the one Porter presented.

During the meeting, Tolia noted that in exploring these kinds of changes, the company would also have to consider its mission to protect the privacy of users. Currently, Nextdoor reads posts only when the company is responding to some kind of complaint or conflict, and it doesn’t have any proactive monitoring in place. “We don’t sign into these neighborhoods and read what the discussions are,” Tolia said. “If we are choosing to look at a post … we need to have a really good reason why.” He added: “Because we don’t give a lot of explicit guidance when people post things, the thing we’re trying to think about is, how do we start to provide that education?”

Tolia also pointed out that there are challenges in getting people to shift their approach to crime reporting: “With the suspicious behavior thing, there is going to have to be some unlearning of behaviors that people have in their mind and then a relearning of what is appropriate. And that’s what we’ve got to try to influence. But it’s not easy, because people have different senses of what is suspicious behavior and what is appropriate.”

The Neighbors for Racial Justice members emphasized the ways in which racial profiling on Nextdoor makes people of color feel less safe and welcome in their own neighborhoods. And Tolia repeatedly said he took those concerns seriously, and that profiling and the resulting harm run counter to the company’s broader objectives. “One of our missions is to actually create very constructive dialogue that leads to safer neighborhoods where people feel like they belong,” he said.